Making a multiplayer game with Go and gRPC

tl;dr here’s the repo https://github.com/mortenson/grpc-game-example

Recently I’ve started to pick up a new programming language, Go, but have struggled to absorb lessons from presentations and tutorials into practical knowledge. My preferred learning method is always to work on a real project, even if it means the finished work has loads of flaws. I’ve also been reading more about gRPC, which is useful to know professionally but also has a nice Go implementation. With all this in mind, I decided to create a multiplayer online game using Go and gRPC. I’ll go over what I ended up with in this blog post, but keep in mind that I’m only ~3 months into Go and have never made a multiplayer game before, so design mistakes are more than likely.

A summary of the game

The finished product here was “tshooter” - a local or online multiplayer shooting game you play in your terminal. Players can move in a map and fire lasers at other players. When a player is hit, they respawn on the map and the shooting player’s score is increased. When a player reaches 10 kills, the round ends and a new round begins. You can play the game offline with bots, or online with up to eight players (but that limit is arbitrary).

Building a game engine

While there are many nice game engines for Go, the whole point of this project was to learn, so I figured building on top of a huge abstraction wouldn’t be the best use of my time. I read a bit about game engine paradigms like the “Entity Component System” pattern, and decided to build something that had some abstractions but didn’t go too overboard since the scope of what I wanted to accomplish was relatively small.

I created a “backend” package for my project, which provides a “Game” struct that holds information about the game state:

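```go
type Game struct {
	Entities        map[uuid.UUID]Identifier // players and lasers: anything that isn't static
	gameMap         [][]rune                 // walls and spawn points
	Mu              sync.RWMutex
	ChangeChannel   chan Change // changes that were actually applied
	ActionChannel   chan Action // requested changes, which may be rejected
	lastAction      map[string]time.Time
	Score           map[uuid.UUID]int
	NewRoundAt      time.Time
	RoundWinner     uuid.UUID
	WaitForRound    bool // while true, incoming actions are ignored
	IsAuthoritative bool // false on clients, which wait for the server to confirm kills
	spawnPointIndex int
}
```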

I also came up with a way to communicate game events by using an “Action” channel and a “Change” channel. Actions are requests to change game state, and may not be accepted by the game. For instance, when a player presses an arrow key, a “MoveAction” is sent to the action channel with the following information:

```go
type MoveAction struct {
	Direction Direction
	ID        uuid.UUID
	Created   time.Time
}
```

In a separate goroutine, the game receives actions and performs them:

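```go
func (game *Game) watchActions() {
	for {
		action := <-game.ActionChannel
		game.Mu.Lock()
		// WaitForRound is part of the shared game state, so it is read
		// under the lock instead of before acquiring it.
		if !game.WaitForRound {
			action.Perform(game)
		}
		game.Mu.Unlock()
	}
}
```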

Each action implements the Action interface, which contains a Perform method that may change game state. For instance, the MoveAction’s Perform method will not move an entity if any of the following are true:

- A player moved too recently

- A player is trying to move into a wall

- A player is trying to move into another player

With this abstraction in place, things outside of the game can send any number of actions without worrying about whether or not that Action will actually work. This lets players spam keys on their keyboard, or clients spam requests to the server, without negative consequences.

When an action is performed and actually changes game state, a Change struct is sent on the change channel, which can be used to communicate changes to clients/servers. When a player is moved as a result of an action, this struct is sent:

```go
type MoveChange struct {
	Change
	Entity    Identifier
	Direction Direction
	Position  Coordinate
}
```

If something changes game state directly without using an action, no change is fired. This is important because now the client/server (which we’ll get into later) knows exactly what they need to communicate.

When a laser is fired by a player, the resulting change is more abstract:

```go
type AddEntityChange struct {
	Change
	Entity Identifier
}
```

I made this decision because if I add new weapons, or the player gets the ability to create static objects, AddEntityChange could be reused. Since in the end this change is only used for new lasers, it feels quite awkward in the codebase. This is probably an example of over-abstracting things too early.

The action => change flow is half of how the game engine works, with the other half being another goroutine that watches for entity collisions. Entities in the engine are anything on the map that isn’t completely static, which for now are players and lasers. The collision loop is quite long so I won’t go into the code, but it does the following:

- Create a map of coordinates (X/Y pairs) to sets of entities.

- For each map item, check if more than one entity exists. If so, that means a collision took place.

- Check if one of those entities is a laser.

- For each colliding entity, check the entity type. If a laser collides with a laser it is destroyed. If a laser collides with a player, the player is respawned and the score of the player who fired the laser is incremented.

- Check if the player score is high enough to queue a new round - if so change the game state accordingly and inform listeners on the change channel.

- Remove lasers that hit walls. This happens later on since walls aren’t entities; they’re a part of the game map.
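The core of those steps can be sketched roughly as follows. This is a simplified illustration, not the project's collision function: entities are flattened into a hypothetical Entity struct instead of the engine's Identifier interface, every laser in a collision is assumed to be destroyed, a hit player is credited to the first laser's owner, and the round-end check and wall removal are left out.

```go
package main

import "fmt"

type Coordinate struct{ X, Y int }

type Kind int

const (
	KindPlayer Kind = iota
	KindLaser
)

// Entity is a hypothetical flattened entity; OwnerID is only set for lasers.
type Entity struct {
	ID      string
	Kind    Kind
	Pos     Coordinate
	OwnerID string
}

// findCollisions groups entities by cell and keeps only cells holding more than one entity.
func findCollisions(entities []Entity) map[Coordinate][]Entity {
	byPos := make(map[Coordinate][]Entity)
	for _, e := range entities {
		byPos[e.Pos] = append(byPos[e.Pos], e)
	}
	collisions := make(map[Coordinate][]Entity)
	for pos, group := range byPos {
		if len(group) > 1 {
			collisions[pos] = group
		}
	}
	return collisions
}

// resolve destroys every laser involved in a collision and respawns any player
// a laser hit, crediting the kill to the laser's owner in score.
func resolve(collisions map[Coordinate][]Entity, score map[string]int) (destroyed, respawned []string) {
	for _, group := range collisions {
		var lasers []Entity
		for _, e := range group {
			if e.Kind == KindLaser {
				lasers = append(lasers, e)
			}
		}
		if len(lasers) == 0 {
			continue // no laser involved, nothing to resolve
		}
		for _, l := range lasers {
			destroyed = append(destroyed, l.ID)
		}
		for _, e := range group {
			if e.Kind == KindPlayer {
				respawned = append(respawned, e.ID)
				score[lasers[0].OwnerID]++
			}
		}
	}
	return destroyed, respawned
}

func main() {
	entities := []Entity{
		{ID: "p1", Kind: KindPlayer, Pos: Coordinate{X: 2, Y: 2}},
		{ID: "l1", Kind: KindLaser, Pos: Coordinate{X: 2, Y: 2}, OwnerID: "p2"},
		{ID: "p2", Kind: KindPlayer, Pos: Coordinate{X: 5, Y: 5}},
	}
	score := map[string]int{}
	destroyed, respawned := resolve(findCollisions(entities), score)
	fmt.Println(destroyed, respawned, score["p2"]) // [l1] [p1] 1
}
```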

The collision function is one of the longest in the codebase, and is probably the messiest. Maybe with more entity types you could abstract what happens when two entities collide, like the laser could be “lethal” and the player could be “killable”, but it didn’t feel worth it when writing this.

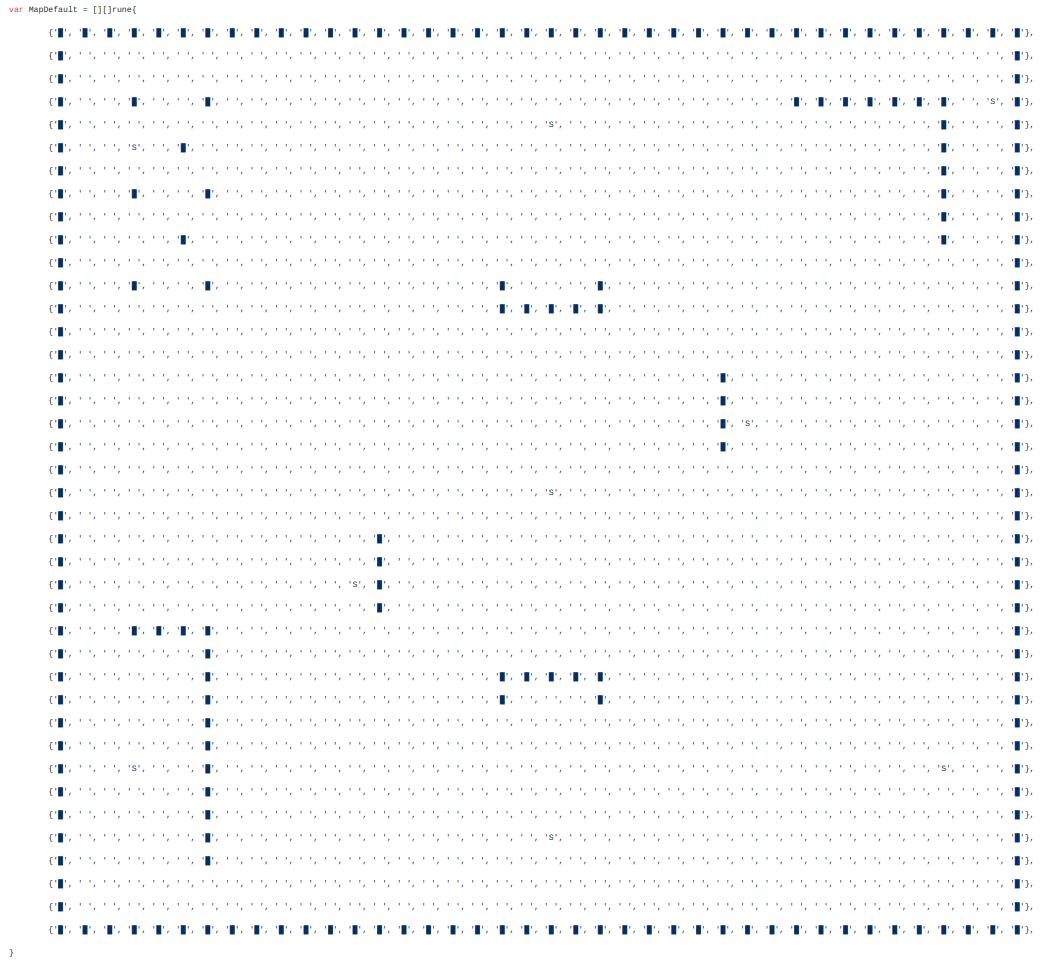

For the game map, I went with a two-dimensional slice of runes ([][]rune), which defines where walls and spawn points are located. The default map for the game looks like this:

That's about all the game engine does - consume actions, send changes, and track collisions. The game is completely abstracted away from the frontend and the networking model, which I’m really happy with. I think my biggest regret here is that because there are so many consumers of game state, the codebase has a lot of mutex locking. If I had written this as one game loop instead of asynchronously, maybe this could have been avoided, but I’m not sure which approach is more maintainable.
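To make the rune-slice map concrete, here is a small sketch of how it could be parsed and queried. It assumes '█' marks a wall and 'S' a spawn point (the real map's characters may differ), indexes the map as m[y][x], treats anything off the map as a wall, and hands out spawn points round-robin. That last part is one plausible use for the spawnPointIndex field, not something the post confirms.

```go
package main

import "fmt"

const (
	wallRune  = '█' // hypothetical wall character
	spawnRune = 'S' // hypothetical spawn-point character
)

type Coordinate struct{ X, Y int }

// parseMap converts text rows into a [][]rune indexed as m[y][x]. Converting to
// []rune keeps X a column index even when a row contains multi-byte characters.
func parseMap(rows []string) [][]rune {
	m := make([][]rune, len(rows))
	for y, row := range rows {
		m[y] = []rune(row)
	}
	return m
}

// isWall reports whether c is a wall; cells outside the map count as walls.
func isWall(m [][]rune, c Coordinate) bool {
	if c.Y < 0 || c.Y >= len(m) || c.X < 0 || c.X >= len(m[c.Y]) {
		return true
	}
	return m[c.Y][c.X] == wallRune
}

// spawnPoints lists every spawn cell in row-major order.
func spawnPoints(m [][]rune) []Coordinate {
	var points []Coordinate
	for y, row := range m {
		for x, r := range row {
			if r == spawnRune {
				points = append(points, Coordinate{X: x, Y: y})
			}
		}
	}
	return points
}

// nextSpawn cycles through the spawn points using a caller-owned index.
// It panics if points is empty, so a map must define at least one spawn.
func nextSpawn(points []Coordinate, index *int) Coordinate {
	p := points[*index%len(points)]
	*index++
	return p
}

func main() {
	m := parseMap([]string{
		"█████",
		"█S S█",
		"█████",
	})
	fmt.Println(isWall(m, Coordinate{X: 0, Y: 0}), isWall(m, Coordinate{X: 2, Y: 1})) // true false
	points := spawnPoints(m)
	idx := 0
	first := nextSpawn(points, &idx)
	second := nextSpawn(points, &idx)
	fmt.Println(first, second) // {1 1} {3 1}
}
```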

A terminal frontend

For the frontend of the game, I used tview, a project that lets you quickly make terminal user interfaces with Go. This game doesn’t have a complex user interface, but tview provided a lot of useful features for me:

- If I wanted to add in forms later, tview would be essential for a good user experience.

- Its layout tools allow the game “viewport” to be flexible - adjusting to terminal resizes and the presence of other screen content (like borders, help text, modals).

- Building something with the terminal lets me skip the artistic part of game development, which for me takes the most time.

The design of the frontend isn’t remarkable or complex - in fact that part of the codebase is the least “architected” compared to other packages. For displaying game state, the viewport is redrawn at around 60 frames per second, and literally loops through the game’s entities and map to output ASCII characters at the appropriate position.
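A redraw along those lines can be sketched without tview: copy the static map, stamp each entity's character onto it, and turn the rows into strings. The drawable type and the 60 frames-per-second ticker below are illustrative assumptions; in the real frontend the drawing happens inside tview and presumably reads the game state under its lock.

```go
package main

import (
	"fmt"
	"strings"
	"time"
)

type Coordinate struct{ X, Y int }

// drawable is a hypothetical minimal view of an entity: where it is and what to draw.
type drawable struct {
	Pos  Coordinate
	Char rune
}

// frameInterval targets roughly 60 redraws per second.
const frameInterval = time.Second / 60

// renderFrame copies the static map, then overwrites cells that hold entities.
// The input map is never modified.
func renderFrame(gameMap [][]rune, entities []drawable) []string {
	grid := make([][]rune, len(gameMap))
	for y := range gameMap {
		grid[y] = append([]rune(nil), gameMap[y]...)
	}
	for _, e := range entities {
		if e.Pos.Y >= 0 && e.Pos.Y < len(grid) && e.Pos.X >= 0 && e.Pos.X < len(grid[e.Pos.Y]) {
			grid[e.Pos.Y][e.Pos.X] = e.Char
		}
	}
	lines := make([]string, len(grid))
	for y, row := range grid {
		lines[y] = string(row)
	}
	return lines
}

func main() {
	gameMap := [][]rune{[]rune("#####"), []rune("#   #"), []rune("#####")}
	player := drawable{Pos: Coordinate{X: 1, Y: 1}, Char: '@'}
	ticker := time.NewTicker(frameInterval)
	defer ticker.Stop()
	for frame := 0; frame < 3; frame++ {
		<-ticker.C
		player.Pos.X = 1 + frame // pretend the player walks right each frame
		fmt.Println(strings.Join(renderFrame(gameMap, []drawable{player}), "\n"))
	}
}
```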

One interaction I like in the frontend is the camera movements when the screen is smaller - there’s a dynamic threshold of how far the player can travel from the center of the screen before the camera starts scrolling, which allows the game to be played on any terminal size.
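One way to implement that kind of camera, per axis: keep an offset into the map, and only move it once the player drifts more than a threshold away from the center of the viewport. The quarter-of-the-viewport threshold and the clamping to the map edges are assumptions for illustration, not the game's actual numbers.

```go
package main

import "fmt"

// followAxis returns the camera offset (the map coordinate drawn at the
// left or top edge of the viewport) along one axis.
func followAxis(playerPos, offset, viewSize, mapSize int) int {
	if mapSize <= viewSize {
		return 0 // the whole map fits on screen, so the camera never moves
	}
	// The threshold scales with the viewport, so small terminals scroll sooner.
	threshold := viewSize / 4
	center := offset + viewSize/2
	switch {
	case playerPos > center+threshold:
		offset += playerPos - (center + threshold)
	case playerPos < center-threshold:
		offset -= (center - threshold) - playerPos
	}
	// Never scroll past the edges of the map.
	if offset < 0 {
		offset = 0
	}
	if maxOffset := mapSize - viewSize; offset > maxOffset {
		offset = maxOffset
	}
	return offset
}

func main() {
	offset := 0
	for _, x := range []int{10, 15, 17, 30} {
		offset = followAxis(x, offset, 20, 100)
		fmt.Println("player at", x, "camera offset", offset)
	}
}
```

Calling it once for X with the viewport width and once for Y with its height gives the two-dimensional camera.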

Beyond displaying game state, the frontend also handles user input. As outlined above, when the user presses the arrow keys a MoveAction is sent to the backend, and when they press any WASD key a LaserAction is sent. The frontend never writes game state directly, and contains almost no state itself.
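That input handling boils down to a key-to-action translation with no game logic in it. In this sketch the key names are hypothetical strings standing in for the key events tview delivers, MoveAction and LaserAction are reduced to just a direction, and a buffered channel stands in for the game's action channel; the engine remains the only place that decides whether an action takes effect.

```go
package main

import "fmt"

type Direction int

const (
	Up Direction = iota
	Down
	Left
	Right
)

type MoveAction struct{ Direction Direction }
type LaserAction struct{ Direction Direction }

// actionForKey maps arrow keys to moves and WASD to lasers. It has no access
// to game state, so it cannot decide whether the action is valid.
func actionForKey(key string) (any, bool) {
	switch key {
	case "Up":
		return MoveAction{Direction: Up}, true
	case "Down":
		return MoveAction{Direction: Down}, true
	case "Left":
		return MoveAction{Direction: Left}, true
	case "Right":
		return MoveAction{Direction: Right}, true
	case "w":
		return LaserAction{Direction: Up}, true
	case "s":
		return LaserAction{Direction: Down}, true
	case "a":
		return LaserAction{Direction: Left}, true
	case "d":
		return LaserAction{Direction: Right}, true
	}
	return nil, false
}

func main() {
	actions := make(chan any, 8) // stands in for game.ActionChannel
	for _, key := range []string{"Up", "d", "q"} {
		if action, ok := actionForKey(key); ok {
			actions <- action
		}
	}
	close(actions)
	for action := range actions {
		fmt.Printf("%T %+v\n", action, action)
	}
}
```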

gRPC protobuf design

With the backend and frontend in place, the game was fully functional offline. Next I had to outline the .proto file for the game, and decide how the client and server would work.

After a lot of iteration, I decided to split the game into two gRPC services:

```protobuf
service Game {
  rpc Connect (ConnectRequest) returns (ConnectResponse) {}
  rpc Stream (stream Request) returns (stream Response) {}
}
```

“Connect” would allow clients to request to be added to the game. Once their connection was accepted, they could start a new bi-directional stream with the server. The connect messages are:

```protobuf
message ConnectRequest {
  string id = 1;
  string name = 2;
  string password = 3;
}

message ConnectResponse {
  string token = 1;
  repeated Entity entities = 2;
}
```

ConnectRequest contains an ID (the UUID of the player), the player name, and the server’s password. Once connected, the server responds with a token and a set of entities, which lets you join with the initial state of the game intact.

In the first iteration of the design, everything happened in the stream, including the request to connect. This worked, but meant that you could open unlimited streams to the server with no authentication, and the server would have to wait on the connect request which is costly. While I didn’t test this, I would imagine that you could open enough streams to overload the server, or hit some connection or thread limit. Having the connect request separate felt more secure to me, although security was not a primary concern with this project.
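The connect step can be sketched as a plain function: check the password, mint a random token, and remember which player it belongs to, so a stream presenting an unknown token can be rejected before it costs anything. The server type, the hex token format, and the playerForToken helper are hypothetical; the real server keys its clients map by UUID, and how the token accompanies the stream isn't shown here. crypto/subtle is used so the comparison doesn't reveal how much of the password matched.

```go
package main

import (
	"crypto/rand"
	"crypto/subtle"
	"encoding/hex"
	"errors"
	"fmt"
	"sync"
)

// ConnectRequest is a hand-written stand-in for the generated proto message.
type ConnectRequest struct {
	ID, Name, Password string
}

var errBadPassword = errors.New("invalid password")

type server struct {
	mu       sync.Mutex
	password string
	clients  map[string]string // token -> player ID
}

// connect validates the password and issues a token for the player.
func (s *server) connect(req ConnectRequest) (string, error) {
	if subtle.ConstantTimeCompare([]byte(req.Password), []byte(s.password)) != 1 {
		return "", errBadPassword
	}
	buf := make([]byte, 16)
	if _, err := rand.Read(buf); err != nil {
		return "", err
	}
	token := hex.EncodeToString(buf)
	s.mu.Lock()
	s.clients[token] = req.ID
	s.mu.Unlock()
	return token, nil
}

// playerForToken is what a stream handler would check before doing any work.
func (s *server) playerForToken(token string) (string, bool) {
	s.mu.Lock()
	defer s.mu.Unlock()
	id, ok := s.clients[token]
	return id, ok
}

func main() {
	s := &server{password: "hunter2", clients: map[string]string{}}
	if _, err := s.connect(ConnectRequest{ID: "p1", Name: "Sam", Password: "wrong"}); err != nil {
		fmt.Println("rejected:", err)
	}
	token, err := s.connect(ConnectRequest{ID: "p1", Name: "Sam", Password: "hunter2"})
	if err != nil {
		panic(err)
	}
	id, ok := s.playerForToken(token)
	fmt.Println(id, ok)
}
```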

The stream messages look like this:

```protobuf
message Request {
  oneof action {
    Move move = 1;
    Laser laser = 2;
  }
}

message Response {
  oneof action {
    AddEntity addEntity = 1;
    UpdateEntity updateEntity = 2;
    RemoveEntity removeEntity = 3;
    PlayerRespawn playerRespawn = 4;
    RoundOver roundOver = 5;
    RoundStart roundStart = 6;
  }
}
```

The requests and responses mirror game actions and changes, which makes the integration between the game engine and the client/server feel natural. Note that the use of “oneof” here is, as far as I’m aware, the only way to use multiple message types with gRPC streaming. While it seems OK in the .proto file, I found this cumbersome to deal with in the implementation.
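For illustration, this is roughly what consuming a oneof looks like on the Go side. protoc-gen-go generates an unexported interface for the oneof plus one wrapper struct per case (here Request_Move and Request_Laser), and the handler type-switches on them. The types below are hand-written stand-ins shaped like that generated code so the sketch compiles on its own; the returned strings are placeholders for calls such as handleMoveRequest.

```go
package main

import "fmt"

type Move struct{ Direction int32 }
type Laser struct{ Direction int32 }

// isRequest_Action mirrors the interface protoc-gen-go emits for `oneof action`.
type isRequest_Action interface{ isRequest_Action() }

// One wrapper struct per oneof case.
type Request_Move struct{ Move *Move }
type Request_Laser struct{ Laser *Laser }

func (*Request_Move) isRequest_Action()  {}
func (*Request_Laser) isRequest_Action() {}

type Request struct{ Action isRequest_Action }

// GetAction is nil-safe, like the generated getters.
func (r *Request) GetAction() isRequest_Action {
	if r == nil {
		return nil
	}
	return r.Action
}

// dispatch unwraps the oneof with a type switch.
func dispatch(req *Request) string {
	switch action := req.GetAction().(type) {
	case *Request_Move:
		return fmt.Sprintf("move %d", action.Move.Direction)
	case *Request_Laser:
		return fmt.Sprintf("laser %d", action.Laser.Direction)
	default:
		return "unknown request" // nil, or a case this server doesn't know about
	}
}

func main() {
	fmt.Println(dispatch(&Request{Action: &Request_Move{Move: &Move{Direction: 3}}}))
	fmt.Println(dispatch(&Request{}))
}
```

The generated code also provides per-case getters such as GetMove(), which is what handleMoveRequest uses once the case is known.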

I don’t want to go too deep into the messages, but I can show two examples. First, here’s the message for move requests:

```protobuf
enum Direction {
  UP = 0;
  DOWN = 1;
  LEFT = 2;
  RIGHT = 3;
  STOP = 4;
}

message Move {
  Direction direction = 1;
}
```

As shown here, clients send the most minimal requests possible to the server. The server already knows who the player is and what time the request came in, so we don’t need to mimic the MoveAction struct exactly.

When a move message comes in, a goroutine that watches for stream requests receives it, creates a MoveAction, and sends it to the game engine’s action channel. That code looks like this:

```go
func (s *GameServer) handleMoveRequest(req *proto.Request, currentClient *client) {
	move := req.GetMove()
	s.game.ActionChannel <- backend.MoveAction{
		ID:        currentClient.playerID,
		Direction: proto.GetBackendDirection(move.Direction),
		Created:   time.Now(),
	}
}
```

Note the “proto.GetBackendDirection” method here - I found that I had to transform game engine structs to proto structs (and vice versa) so often that I needed to create helpers that would do the conversion for me. This is used even more heavily with server responses that send new and updated entities to clients.
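A direction helper of that kind might look like the sketch below. backendDirection and protoDirection are hypothetical stand-ins for backend.Direction and the generated proto.Direction enum (whose values follow the .proto above), and mapping unknown values to STOP is an assumption. An explicit table is used instead of a numeric cast so the two enums are free to number their values differently.

```go
package main

import "fmt"

// backendDirection stands in for the engine's Direction type.
type backendDirection int

const (
	dirUp backendDirection = iota
	dirDown
	dirLeft
	dirRight
	dirStop
)

// protoDirection stands in for the generated enum (UP=0 ... STOP=4).
type protoDirection int32

const (
	Direction_UP    protoDirection = 0
	Direction_DOWN  protoDirection = 1
	Direction_LEFT  protoDirection = 2
	Direction_RIGHT protoDirection = 3
	Direction_STOP  protoDirection = 4
)

var toProto = map[backendDirection]protoDirection{
	dirUp:    Direction_UP,
	dirDown:  Direction_DOWN,
	dirLeft:  Direction_LEFT,
	dirRight: Direction_RIGHT,
	dirStop:  Direction_STOP,
}

// toBackend is derived from toProto so the two tables can't drift apart.
var toBackend = func() map[protoDirection]backendDirection {
	m := make(map[protoDirection]backendDirection, len(toProto))
	for b, p := range toProto {
		m[p] = b
	}
	return m
}()

func getProtoDirection(d backendDirection) protoDirection {
	if p, ok := toProto[d]; ok {
		return p
	}
	return Direction_STOP
}

func getBackendDirection(d protoDirection) backendDirection {
	if b, ok := toBackend[d]; ok {
		return b
	}
	return dirStop
}

func main() {
	fmt.Println(getProtoDirection(dirLeft), getBackendDirection(Direction_RIGHT))
}
```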

For another example, here’s the proto messages used for adding lasers:

```protobuf
message Laser {
  string id = 1;
  Direction direction = 2;
  google.protobuf.Timestamp startTime = 3;
  Coordinate initialPosition = 4;
  string ownerId = 5;
}

message Entity {
  oneof entity {
    Player player = 2;
    Laser laser = 3;
  }
}

message AddEntity {
  Entity entity = 1;
}
```

Because the game engine abstracts entities, the proto file needs to as well. The helper method for converting a game laser to a proto laser, which is used in this message, is:

```go
func GetProtoLaser(laser *backend.Laser) *Laser {
	timestamp, err := ptypes.TimestampProto(laser.StartTime)
	if err != nil {
		log.Printf("failed to convert time to proto timestamp: %+v", err)
		return nil
	}
	return &Laser{
		Id:              laser.ID().String(),
		StartTime:       timestamp,
		InitialPosition: GetProtoCoordinate(laser.InitialPosition),
		Direction:       GetProtoDirection(laser.Direction),
		OwnerId:         laser.OwnerID.String(),
	}
}
```

These helper methods can convert to/from proto structs for players, lasers, coordinates, and directions. It seems like a lot of boilerplate to write to use gRPC, but made my life a lot easier in the long run. I considered using proto structs for the game engine as well to make this easier, but I found them hard to work with and almost unreadable when using “oneof” because of all the nested interfaces. I think this is more of a Go problem than a gRPC one.

An authoritative server

Talking about the messages above gives an idea of the kind of work the server and client have to do, but I’ll go over some snippets that I find interesting.

The shooter server is an authoritative game server, which means it runs a real instance of the game and treats client requests like user input. As a result, the server integration with the game engine is close to the frontend’s, in terms of how actions are sent.

As mentioned above, when a client connects it sends over some basic information, which when validated is used to add a new player to the game, and to add a new client to the server. Here’s what the server structs look like:

```go
type client struct {
	streamServer proto.Game_StreamServer
	lastMessage  time.Time
	done         chan error
	playerID     uuid.UUID
	id           uuid.UUID
}

type GameServer struct {
	proto.UnimplementedGameServer
	game     *backend.Game
	clients  map[uuid.UUID]*client
	mu       sync.RWMutex
	password string
}
```

The server maps connection tokens to clients, and each client holds an instance of that client’s stream server (which is set when they start streaming). Storing a map of clients allows the server to broadcast messages to all clients when game state changes, which is done using this method:

```go
func (s *GameServer) broadcast(resp *proto.Response) {
	s.mu.Lock()
	for id, currentClient := range s.clients {
		// Clients that haven't opened their stream yet can't receive anything.
		if currentClient.streamServer == nil {
			continue
		}
		if err := currentClient.streamServer.Send(resp); err != nil {
			log.Printf("%s - broadcast error %v", id, err)
			currentClient.done <- errors.New("failed to broadcast message")
			continue
		}
		log.Printf("%s - broadcasted %+v", id, resp)
	}
	s.mu.Unlock()
}
```

A typical connect/broadcast flow looks like this:

1. Player1 makes a connect request

2. The server adds the player to the game, adds a new client, and responds with a token

3. Player1 uses the token to open a new stream

4. The server starts a loop to watch for messages, and stores the stream server in the client struct

5. Player2 follows steps 1-4

6. Player1 makes a move request on the stream

7. The server sends a move action to the game engine

8. The game engine accepts the action and sends a move change

9. The server sees the move change, and broadcasts an UpdateEntity response containing Player1’s new position to all connected clients

10. Player1 and Player2 receive the message and update their local instance of the entity

Typically in multiplayer games, servers have a period of time where they accept requests, change game state, then broadcast all state changes at once to all clients. This “tick” limits how many messages the server sends, and prevents players with faster connections from having a huge advantage. It also lets you more easily calculate ping. The server I built for this game broadcasts changes as soon as they happen, which is probably a design mistake. I don’t think I can quantify what negative effects it has, but it’s certainly not efficient.

One other issue I found in my design, of course after I was done with this example project, is that there may be cases where the server calls Send on the same client’s stream from more than one goroutine at once. According to the gRPC docs (https://github.com/grpc/grpc-go/blob/master/Documentation/concurrency.m…) this is not safe. Using a normal tick to send updates would have avoided this issue.
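Another way to satisfy that rule, with or without a tick, is to give each client its own outbound queue and a single goroutine that is the only caller of Send for that stream. In this sketch the send function and the string messages are stand-ins for the client's stream and *proto.Response, and dropping messages for a client whose queue is full is an assumption (disconnecting it would be another option).

```go
package main

import (
	"errors"
	"fmt"
)

// client owns a buffered queue; only its writer goroutine ever calls send.
type client struct {
	out  chan string
	done chan struct{} // closed when the writer goroutine exits
}

func newClient(send func(string) error, buffer int) *client {
	c := &client{out: make(chan string, buffer), done: make(chan struct{})}
	go func() {
		defer close(c.done)
		for msg := range c.out {
			if err := send(msg); err != nil {
				return // real code would also remove the client from the server
			}
		}
	}()
	return c
}

// enqueue never blocks, so a slow client can't stall a broadcast to everyone
// else. It must not be called after c.out is closed, so a server would remove
// the client from its map (under its lock) before closing the queue.
func (c *client) enqueue(msg string) bool {
	select {
	case c.out <- msg:
		return true
	default:
		return false // queue full: message dropped
	}
}

func main() {
	c := newClient(func(msg string) error {
		if msg == "boom" {
			return errors.New("stream closed")
		}
		fmt.Println("sent:", msg)
		return nil
	}, 8)
	for _, msg := range []string{"hello", "world", "boom", "never sent"} {
		c.enqueue(msg)
	}
	close(c.out) // no more messages; the writer exits once it stops reading
	<-c.done
}
```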

A (relatively) simple client

In terms of its gRPC integration, the client is similar to the server. It streams responses, converts those responses to structs the game engine can understand, and updates game state directly.

I do have one interesting thing to call out - since the client and the server run their own version of the game engine, high latency could land you in sticky situations as the round comes to a close. For example:

- Player1 shoots a laser at Player2, and sends a laser request to the server

- Player1’s local game thinks the laser hit Player2 and acts like Player2 was killed, which could end the round

- The server gets the laser request, but sees that Player2 moved out of the way, so no collision happens

Now Player1’s game state is dangerously desynchronized from the server state. Recovering from this would mean getting the real score from the server, knowing the round isn’t over, and that Player2 wasn’t hit.

To work around this, I added an “IsAuthoritative” boolean to the game engine. If a game isn’t authoritative, players are never killed when lasers collide with them. In the high latency situation above, Player1 would see the laser hit Player2, but nothing would happen until the server confirmed that Player2 was hit. This is the only part of the game engine I had to directly modify to support multiplayer.
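In code, that flag amounts to an early return in the laser-hits-player branch of the collision check. The sketch below uses a hypothetical, stripped-down game type; the applyPlayerRespawn handler, and the assumption that the server's PlayerRespawn message identifies the killer, are illustrative rather than taken from the project.

```go
package main

import "fmt"

type game struct {
	IsAuthoritative bool
	score           map[string]int
	respawned       []string
}

// laserHitPlayer runs when collision detection finds a laser and a player in the same cell.
func (g *game) laserHitPlayer(playerID, laserOwnerID string) {
	if !g.IsAuthoritative {
		// A client never kills locally; it waits for the server's verdict.
		return
	}
	g.respawned = append(g.respawned, playerID)
	g.score[laserOwnerID]++
}

// applyPlayerRespawn is what a client would run when the server reports a kill.
func (g *game) applyPlayerRespawn(playerID, killerID string) {
	g.respawned = append(g.respawned, playerID)
	g.score[killerID]++
}

func main() {
	server := &game{IsAuthoritative: true, score: map[string]int{}}
	client := &game{score: map[string]int{}}

	// Both games see Player1's laser reach Player2.
	server.laserHitPlayer("Player2", "Player1")
	client.laserHitPlayer("Player2", "Player1")
	fmt.Println(server.score["Player1"], client.score["Player1"]) // 1 0

	// The kill only counts on the client once the server confirms it.
	client.applyPlayerRespawn("Player2", "Player1")
	fmt.Println(client.score["Player1"]) // 1
}
```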

Bonus - bots

Testing the game by myself was getting boring, so I decided to throw together a simple bot system that was compatible with the game. Bots run in a goroutine that watches for game changes, and sends actions to the game engine in the same way the frontend does.

The algorithm for the bots is straightforward:

- Get a map of all player positions

- See if any player can be shot - in other words, if a player is in the same row or column as the bot, and no walls are between them

- If a player can be shot at, fire a laser

- If no player can be shot at, find the closest player to the bot

- Use an A* algorithm to determine a path between the bot and the player. I used https://github.com/beefsack/go-astar which worked great

- Move on the path towards the player
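The first steps of that loop are simple enough to sketch. canShoot checks whether a target shares a row or column with the bot with no wall between them, and nearest picks the closest player by Manhattan distance, which is an assumption since the post doesn't say how "closest" is measured. The isWall callback and Coordinate type are hypothetical, and the pathfinding step is left to go-astar as described above.

```go
package main

import "fmt"

type Coordinate struct{ X, Y int }

type Direction int

const (
	Up Direction = iota
	Down
	Left
	Right
)

func add(a, b Coordinate) Coordinate { return Coordinate{X: a.X + b.X, Y: a.Y + b.Y} }

// canShoot reports whether a laser fired from bot would reach target, and in which direction.
func canShoot(bot, target Coordinate, isWall func(Coordinate) bool) (Direction, bool) {
	var step Coordinate
	var dir Direction
	switch {
	case bot == target:
		return 0, false
	case bot.X == target.X && target.Y < bot.Y:
		step, dir = Coordinate{Y: -1}, Up
	case bot.X == target.X:
		step, dir = Coordinate{Y: 1}, Down
	case bot.Y == target.Y && target.X < bot.X:
		step, dir = Coordinate{X: -1}, Left
	case bot.Y == target.Y:
		step, dir = Coordinate{X: 1}, Right
	default:
		return 0, false // not in the same row or column
	}
	// Walk the cells strictly between bot and target looking for walls.
	for c := add(bot, step); c != target; c = add(c, step) {
		if isWall(c) {
			return 0, false
		}
	}
	return dir, true
}

func abs(n int) int {
	if n < 0 {
		return -n
	}
	return n
}

// nearest returns the closest player by Manhattan distance.
func nearest(bot Coordinate, players []Coordinate) (Coordinate, bool) {
	best, found := Coordinate{}, false
	for _, p := range players {
		if !found || abs(p.X-bot.X)+abs(p.Y-bot.Y) < abs(best.X-bot.X)+abs(best.Y-bot.Y) {
			best, found = p, true
		}
	}
	return best, found
}

func main() {
	walls := map[Coordinate]bool{{X: 2, Y: 0}: true}
	isWall := func(c Coordinate) bool { return walls[c] }
	fmt.Println(canShoot(Coordinate{X: 0, Y: 0}, Coordinate{X: 4, Y: 0}, isWall)) // 0 false (wall in the way)
	fmt.Println(canShoot(Coordinate{X: 0, Y: 1}, Coordinate{X: 4, Y: 1}, isWall)) // 3 true (Right)
	fmt.Println(nearest(Coordinate{}, []Coordinate{{X: 5, Y: 5}, {X: 1, Y: 2}}))  // {1 2} true
}
```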

This worked well with one player and one bot, but I found that when bots faced off they would get stuck in loops of always moving or always shooting:

To work around this, I added in some lazy code to have bots randomly move in one direction ~40% of the time. This let them break out of loops and made them harder to predict, although I’m sure there are better ways to make bots smarter. Here they are after the change:

What I really liked about building this is that it proved that the game was abstract enough to allow for something as seemingly complex as bots to be added without any refactoring. Additionally, I found that I could run a bot locally and connect it to a game server with no issues - so you could have a fully networked game of bots running on separate machines.

Having a strict separation of frontend, backend, server, client, and bot goroutines allows for a lot of flexibility, but has downsides.

While we’re talking about concurrency…

Since I made this engine from scratch, and was in many ways using this as a project for me to learn Go, I ended up not following a lot of game programming patterns. For instance, there is no “main” game loop. Instead, there are many independent goroutines with different tickers. For example, a client in a networked game has these goroutines:

- Frontend goroutine to redraw the game

- Frontend goroutine to watch for app closures

- Backend goroutine to watch the action channel

- Backend goroutine to watch for collisions

- Client goroutine to watch the change channel

- Client goroutine to receive stream messages

All of these goroutines access the game state, and as a result all need to lock the game’s mutex to ensure there are no race conditions. Because there’s so much locking, it’s hard for me to say for sure that the game is free of deadlocks (although I’ve tested it quite a bit). I really like that each part of the game is separated, but how much easier would things be if I consolidated the goroutines and created something closer to a traditional game loop?
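As a rough illustration of that alternative, a traditional loop lets one goroutine own the state and work in fixed steps: drain whatever input arrived since the last tick, update, then publish a copy for the renderer and network code. The world type below holds only positions and is hypothetical; collision checks and round logic would slot into the update step. It is one possible design, not something the project implements.

```go
package main

import (
	"fmt"
	"time"
)

type Coordinate struct{ X, Y int }

type moveAction struct {
	ID    string
	Delta Coordinate
}

// world is only ever touched by the loop goroutine, so it needs no mutex.
type world struct {
	positions map[string]Coordinate
	ticks     int
}

// step runs one iteration: apply input, update, publish a snapshot.
func (w *world) step(actions <-chan moveAction) map[string]Coordinate {
	// 1. Apply every queued action without blocking.
	for drained := false; !drained; {
		select {
		case a, ok := <-actions:
			if !ok {
				drained = true // channel closed: nothing more will arrive
				continue
			}
			p := w.positions[a.ID]
			w.positions[a.ID] = Coordinate{X: p.X + a.Delta.X, Y: p.Y + a.Delta.Y}
		default:
			drained = true
		}
	}
	// 2. Update: collision detection, round timers, and so on would go here.
	w.ticks++
	// 3. Publish a copy so readers never share the live map.
	snapshot := make(map[string]Coordinate, len(w.positions))
	for id, p := range w.positions {
		snapshot[id] = p
	}
	return snapshot
}

func main() {
	w := &world{positions: map[string]Coordinate{}}
	actions := make(chan moveAction, 16) // input goroutines only ever send here
	ticker := time.NewTicker(time.Second / 60)
	defer ticker.Stop()
	actions <- moveAction{ID: "p1", Delta: Coordinate{X: 1}}
	for i := 0; i < 3; i++ {
		<-ticker.C
		snapshot := w.step(actions) // renderers and clients would receive this copy
		fmt.Println(w.ticks, snapshot)
		actions <- moveAction{ID: "p1", Delta: Coordinate{Y: 1}}
	}
}
```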

The finished product

In the end, I’m happy with what I accomplished and think that this was a good stress test for my Go skills, and for gRPC as a technology. I think I’ve learned a lot of lessons here and want to give a larger, more complex multiplayer terminal game a shot. I think moving to using tick-style updates, fewer goroutines (or simpler locking, or more channels), and having a game that’s less reaction based would work out well. Here’s a short GIF showing the full experience of launching a server and connecting to it from a client:

You can check out the code for this project at https://github.com/mortenson/grpc-game-example, and can download binaries in the releases page. As I mentioned I’m new to Go, so please be gentle when reviewing my work!